The changes focus on safer direct messaging, nudity protection, and improved security for adult-managed accounts

Web Desk

Karachi: Meta has introduced a new set of safety tools and updates across Instagram and Facebook, aimed at enhancing protection for teens and children. The changes focus on safer direct messaging, nudity protection, and improved security for adult-managed accounts that prominently feature minors.

Stronger Messaging Controls and Reporting Tools for Teens

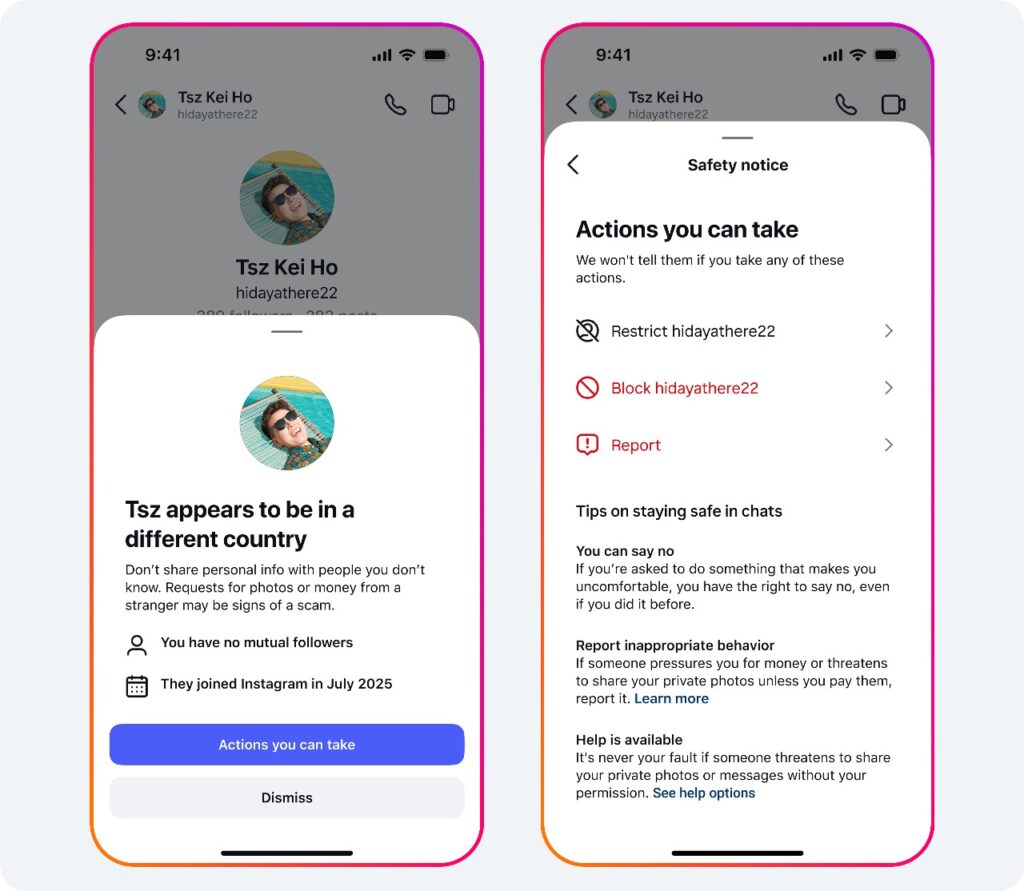

Teen accounts will now show more context in direct messages, including safety tips, the date the sender's account was created, and a new option to block and report an account in a single step. In June alone, teens used Meta's safety notices to block 1 million accounts and report another 1 million.

New Tools to Fight Sextortion and Cross-Border Threats

To combat cross-border sextortion scams, Meta is launching a new "Location Notice" feature on Instagram. The alert helps users recognize when they are chatting with someone based in another country, a common pattern in scams that target young people. More than 10% of users tapped the alert to learn more about how to stay safe.

Nudity Protection and Content Sharing Warnings Expanded

Meta's nudity protection tool, which automatically blurs suspected nude images in DMs, remains widely adopted: 99% of users kept it enabled in June. Its warnings have also discouraged resharing, with nearly 45% of users deciding not to forward an explicit image after receiving a warning.

Enhanced Safeguards for Child-Focused Accounts

For adult-managed accounts that feature children, such as those run by parents or talent agents, Meta is applying protections on par with those of Teen Accounts. These include strict message controls and Hidden Words, which filters out offensive comments.

In addition, Meta will make these accounts less visible to potentially suspicious users, limiting how often they appear in search results and recommendations. Features such as gift acceptance and paid subscriptions have also been disabled for these accounts.

Aggressive Action Against Predatory Behavior

As part of its ongoing efforts to prevent exploitation, Meta removed approximately 135,000 Instagram accounts for posting sexualized comments on, or requesting explicit images from, child-focused accounts. An additional 500,000 Facebook and Instagram accounts linked to them were also removed. Meta continues to work with other tech companies through the Tech Coalition's Lantern program to stop repeat offenders from resurfacing on other platforms.